Convolutional Neural Networks (CNNs) are the backbone of modern computer vision, enabling technologies like facial recognition and self-driving cars. Their power lies in hierarchical learning, building knowledge from simple patterns to complex objects—a process rooted in cognitive complexity. This post unpacks how CNNs mimic biological vision, their layered architecture, and why this approach is so practical.

What is Cognitive Complexity?

In the context of CNNs, cognitive complexity refers to the network's ability to build sophisticated, high-level representations of the world from simple, low-level features. It's a progressive journey of understanding. The network doesn't instantly recognize a "car" in an image. Instead, it begins by identifying basic elements, such as lines, edges, and colors. It then combines these simple features to recognize more complex shapes, like circles and rectangles. Finally, it assembles these shapes to identify even more complicated objects, such as wheels and windows, which ultimately lead to the identification of a car.

This hierarchical process is at the core of how CNNs work. Each successive layer learns to identify more complex patterns based on the outputs of the previous layer. This style of learning is efficient because it lets the network reuse simple, basic features when building complex, wide-ranging ones.

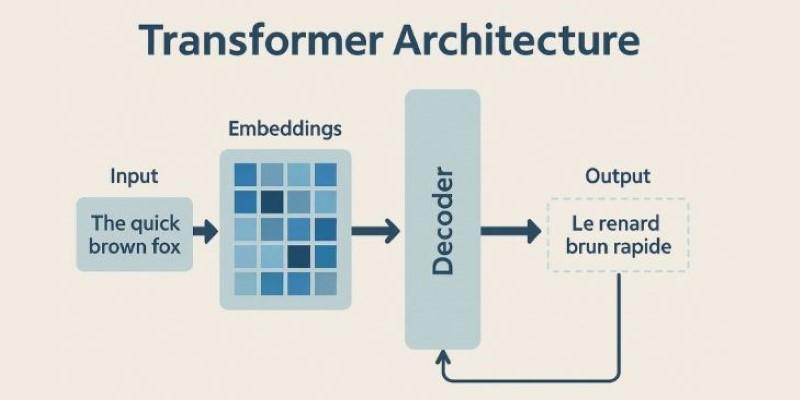

The Architecture of a Convolutional Neural Network

To see how cognitive complexity works in practice, it helps to first lay out the basic anatomy of a CNN. A typical CNN consists of several types of layers, each of which plays a particular role in this process of hierarchical learning.

Convolutional Layers: The Feature Detectors

The core building block of a CNN is the convolutional layer. This is where feature detection happens. These layers apply filters (also referred to as kernels) that scan an input image to detect specific patterns.

Early Layers

The filters in the first layers of the network are simple. They learn to recognize basic characteristics such as horizontal, vertical, and diagonal lines, color gradients, and plain textures. When a filter slides over an area of the image that matches its pattern, it activates, producing a high value at the corresponding position of its output, which is called a feature map.
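To make the sliding-filter idea concrete, here is a minimal sketch in plain Python. The tiny image and the hand-picked vertical-edge kernel are assumptions chosen for illustration; a trained network would learn its own filter weights, and real libraries use optimized implementations rather than nested loops.

```python
def conv2d(image, kernel):
    """Slide the kernel over the image (no padding, stride 1) and
    return the resulting feature map as a list of rows."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[i + di][j + dj] * kernel[di][dj]
                for di in range(kh) for dj in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]

# Illustrative 4x6 image: dark (0) on the left, bright (1) on the right,
# so there is a vertical edge down the middle.
image = [
    [0, 0, 0, 1, 1, 1],
    [0, 0, 0, 1, 1, 1],
    [0, 0, 0, 1, 1, 1],
    [0, 0, 0, 1, 1, 1],
]

# Hand-picked kernel that responds to dark-to-bright transitions
# from left to right (an assumption, not a learned filter).
vertical_edge_kernel = [
    [-1, 0, 1],
    [-1, 0, 1],
    [-1, 0, 1],
]

feature_map = conv2d(image, vertical_edge_kernel)
for row in feature_map:
    print(row)
```

The feature map is zero where the window covers a uniform region and high where the window straddles the edge, which is the "activation" described above.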

Deeper Layers

The feature maps produced by the early layers are then fed into the following convolutional layers. These deeper layers learn more complex and intricate filters that combine the simple features found at lower levels. For example, a layer could learn that a corner is formed where a horizontal line meets a vertical line. Others may group several circular shapes to detect an eye or a wheel.
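The corner example can be sketched as a two-layer toy network. Everything here is an assumption made for illustration: the image, the two hand-picked edge kernels, and the second-layer weights and bias (`CORNER_BIAS`), which a real network would learn during training.

```python
def conv2d(image, kernel):
    """Slide the kernel over the image (no padding, stride 1)."""
    kh, kw = len(kernel), len(kernel[0])
    return [
        [
            sum(image[i + di][j + dj] * kernel[di][dj]
                for di in range(kh) for dj in range(kw))
            for j in range(len(image[0]) - kw + 1)
        ]
        for i in range(len(image) - kh + 1)
    ]

def relu(x):
    """Keep positive activations, zero out the rest."""
    return max(0, x)

# Illustrative 5x5 image: a bright block whose top-left corner sits at the center.
image = [
    [0, 0, 0, 0, 0],
    [0, 0, 0, 0, 0],
    [0, 0, 1, 1, 1],
    [0, 0, 1, 1, 1],
    [0, 0, 1, 1, 1],
]

# Layer 1: two hand-picked edge detectors (assumed, not learned).
vertical_kernel = [[-1, 0, 1], [-1, 0, 1], [-1, 0, 1]]
horizontal_kernel = [[-1, -1, -1], [0, 0, 0], [1, 1, 1]]
vertical_map = [[relu(v) for v in row] for row in conv2d(image, vertical_kernel)]
horizontal_map = [[relu(v) for v in row] for row in conv2d(image, horizontal_kernel)]

# Layer 2: a 1x1 combination of the two feature maps with weights (1, 1).
# The negative bias means one edge alone is not enough to activate the unit.
CORNER_BIAS = -3
corner_map = [
    [relu(h + v + CORNER_BIAS) for h, v in zip(h_row, v_row)]
    for h_row, v_row in zip(horizontal_map, vertical_map)
]

for row in corner_map:
    print(row)
```

In this toy setup the second layer fires only at the position where both edge detectors respond strongly, which is where the corner lies.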

This sequence of convolutional layers enables the network to build successively more abstract and complex representations: raw pixels at the bottom become simple patterns in one layer, and those patterns become parts of objects in the next.

Pooling Layers: Reducing Complexity

A convolutional layer is commonly followed by a pooling layer (also referred to as a subsampling layer). The main idea of the pooling layer is to reduce the spatial dimensions (width and height) of the feature maps.

Shrinking the feature maps helps to:

- Decrease Computational Load: Smaller feature maps mean less computation and memory in the layers that follow, and fewer parameters in the fully connected layers.

- Create Positional Invariance: It makes the network more robust to small shifts and distortions in the input image. For example, whether a cat's eye is in the top-left or center of a small patch, the pooling layer will still signal the presence of an "eye" feature. This helps the network recognize objects regardless of their exact position.

The most common form of pooling is max pooling, which keeps the highest value found in each small region of a feature map. It is essentially a way of summarizing the dominant feature in that region.
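Both effects of pooling can be seen in a small sketch. The 2x2 window size and the two example feature maps are assumptions picked for illustration; the second map contains the same activations as the first, each shifted by one pixel inside its pooling window.

```python
def max_pool2d(feature_map, size=2):
    """Non-overlapping max pooling: keep the largest value in each
    size x size window (stride equal to the window size)."""
    rows, cols = len(feature_map), len(feature_map[0])
    return [
        [
            max(feature_map[i + di][j + dj]
                for di in range(size) for dj in range(size))
            for j in range(0, cols - size + 1, size)
        ]
        for i in range(0, rows - size + 1, size)
    ]

# Illustrative 4x4 feature map with two strong activations.
original = [
    [9, 0, 0, 0],
    [0, 0, 0, 0],
    [0, 0, 0, 5],
    [0, 0, 0, 0],
]

# The same activations, each moved by one pixel within its 2x2 window.
shifted = [
    [0, 0, 0, 0],
    [0, 9, 0, 0],
    [0, 0, 0, 0],
    [0, 0, 5, 0],
]

pooled_original = max_pool2d(original)
pooled_shifted = max_pool2d(shifted)
print(pooled_original)
print(pooled_shifted)
```

Each 4x4 map shrinks to 2x2, and both inputs produce the same pooled output. Note that this robustness only covers shifts that stay inside a pooling window; larger shifts still change the result.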

Fully Connected Layers: The Decision Makers

Once the convolution and pooling stages are completed, the high-level feature maps are flattened into a single vector of numbers. This vector is then fed into one or more fully connected layers, which are the same as those in a conventional neural network.

It is the job of these last layers to make a decision. They take the complex features detected by the convolutional layers and use them to classify the image. For example, when the feature maps indicate the presence of features such as fur, whiskers, pointy ears, and a feline nose, the fully connected layers weight those features and assign a high probability to the image containing a cat.
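The flatten-then-decide step might look like the sketch below. The feature values, the weights, and the two-class setup ("cat" vs. "car") are all made up for illustration; in a real network the weights are learned, and the flattened vector is far longer.

```python
import math

def flatten(feature_maps):
    """Turn a list of 2D feature maps into one flat vector."""
    return [value for fmap in feature_maps for row in fmap for value in row]

def dense(inputs, weights, biases):
    """Fully connected layer: one weighted sum (plus bias) per output unit."""
    return [
        sum(w * x for w, x in zip(unit_weights, inputs)) + bias
        for unit_weights, bias in zip(weights, biases)
    ]

def softmax(logits):
    """Convert raw scores into probabilities that sum to 1."""
    peak = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - peak) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical pooled feature maps, read as activations for
# [fur, whiskers] and [pointy ears, wheels].
feature_maps = [[[0.9, 0.8]], [[0.7, 0.0]]]
features = flatten(feature_maps)

# Hypothetical weights: one row per class, one weight per feature.
classes = ["cat", "car"]
weights = [
    [2.0, 2.0, 1.5, -2.0],    # "cat" rewards fur, whiskers, ears
    [-1.0, -1.0, -0.5, 3.0],  # "car" rewards wheels
]
biases = [0.0, 0.0]

probabilities = softmax(dense(features, weights, biases))
prediction = classes[probabilities.index(max(probabilities))]
print(prediction, probabilities)
```

With these assumed numbers, strong fur, whisker, and ear activations push almost all of the probability onto "cat".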

Parallels with Biological Vision

The hierarchy in CNNs is no accident. It is modeled on how the visual cortex processes what we see. During the 1950s and 1960s, neuroscientists David Hubel and Torsten Wiesel found that the neurons of the visual cortex in mammals are arranged in a hierarchy.

- Simple Cells: They discovered that some neurons (simple cells) respond to simple patterns, such as lines of specific orientations.

- Complex Cells: Other neurons (complex cells) respond to these lines regardless of their exact position within the receptive field. These cells receive input from multiple simple cells.

- Hypercomplex Cells: Highly specialized cells respond to even more complex shapes, such as corners or line ends.

In this biological model, simpler visual information is integrated stage by stage to create more complex perceptions, and this is what CNNs mimic. The cognitive complexity of a CNN reflects the way the brain has evolved to make sense of the visual world. This type of biomimicry is one of the factors that make CNNs very effective at image recognition tasks.

Why Cognitive Complexity Matters

This hierarchical approach offers several significant benefits that account for its power and effectiveness:

Parameter Sharing

The filters are reused across the entire image. A filter that learns to identify a vertical edge can detect that edge anywhere in the image, whereas a standard fully connected network would need to learn separate parameters for every location.
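A quick back-of-the-envelope count shows the size of the saving. The layer sizes below (a 32x32 RGB input, 16 filters of size 3x3, and a fully connected layer producing the same number of output values) are assumptions chosen for illustration.

```python
def conv_params(kernel_h, kernel_w, in_channels, out_channels):
    """Each filter has kernel_h * kernel_w * in_channels weights plus one
    bias, and the same filter is reused at every image position."""
    return (kernel_h * kernel_w * in_channels + 1) * out_channels

def dense_params(in_features, out_features):
    """Each output unit has its own weight for every input, plus one bias."""
    return (in_features + 1) * out_features

# Assumed setup: 32x32 RGB image, 16 feature maps of the same spatial size.
height, width, channels, num_filters = 32, 32, 3, 16

conv_count = conv_params(3, 3, channels, num_filters)
dense_count = dense_params(height * width * channels,
                           height * width * num_filters)

print(f"convolutional layer:   {conv_count:,} parameters")
print(f"fully connected layer: {dense_count:,} parameters")
```

Under these assumptions the convolutional layer needs 448 parameters, while the fully connected layer needs more than 50 million to produce an output of the same size.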

Hierarchical Learning

By constructing more complex features out of simpler ones, the network learns a rich set of representations that can generalize to different tasks. A network trained to identify cats and dogs will have learned basic features (edges, textures, shapes) that can be repurposed to recognize cars and bicycles.

Scalability

The stacked design enables CNNs to scale to more complex tasks. Deeper networks can learn more complex and abstract image features, which usually improves accuracy on more challenging visual tasks. Modern CNNs may have hundreds of layers, each of which adds a more advanced interpretation of the input.

Conclusion

A CNN's strength lies in its hierarchical structure, which mimics the human visual system. Starting with basic edge detection and progressing to complex classifications, each layer builds logically on the last. Understanding this layered process demystifies CNNs, revealing the ordered system that breaks visual information down and reassembles it into meaningful understanding. This is the driving idea behind the continuing development of computer vision and AI.