How to Train LLMs to “Think” (o1 & DeepSeek-R1) for Smarter AI Outcomes

Learn how o1 and DeepSeek-R1 train large language models to "think," improving reasoning and accuracy for smarter AI outcomes.

All articles under this category.

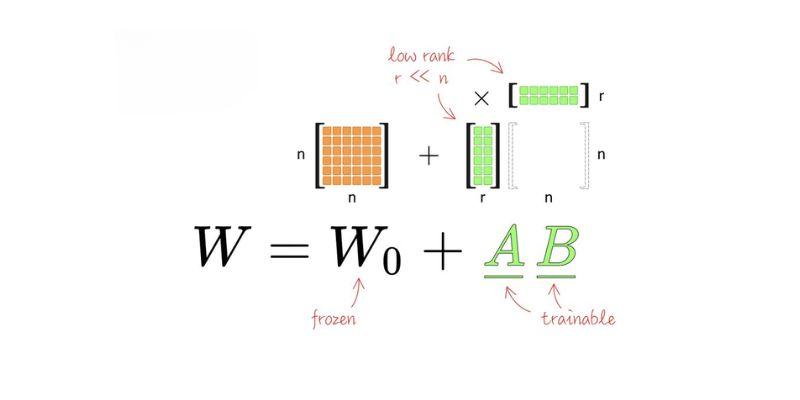

Discover how LoRA fine-tuning works, why it became popular, its limitations, and the emerging alternatives shaping LLM adaptation.

Explore CatBoost, a robust machine learning solution for categorical data, offering performance optimization and reliability in production systems.

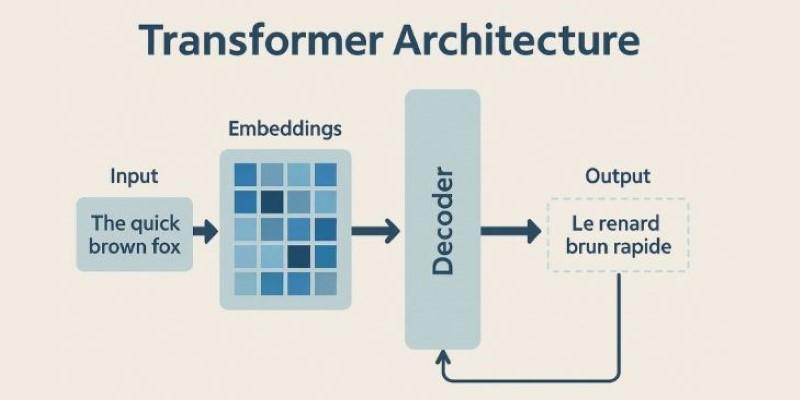

How the transformer architecture powers modern AI systems by using self-attention to process language more efficiently. Learn what makes it so effective in tasks like translation, search, and text generation.

How to build and deploy a RAG pipeline with a clear, practical approach. This guide walks through the setup, embedding, retrieval, generation, and deployment of a retrieval-augmented generation system.

Exploring EoRA strategies that refine AI efficiency through low-bit models, enabling sustainable, adaptable, and accessible AI solutions.

What ChatGPT plugins are, how they work, and how to add them to expand your AI assistant’s capabilities. A simple guide for better productivity.

Google's LangExtract is a tool that helps turn messy text into clear, organized information.

Learn how to implement AI responsibly with strong governance, thorough testing, and continuous improvement to ensure ethical and effective systems.

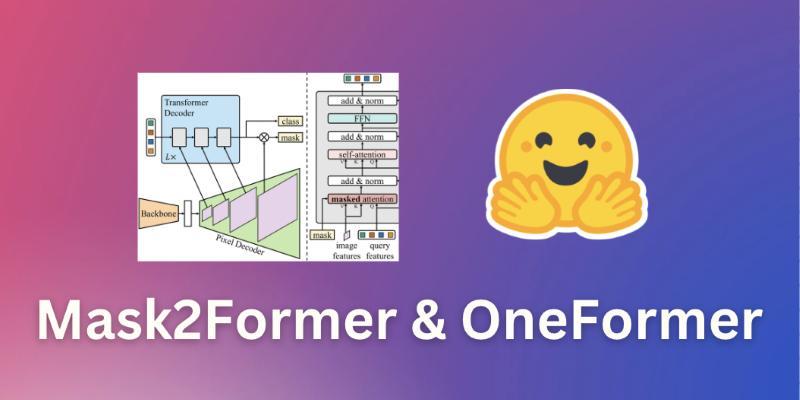

What if one model could handle every image segmentation task? Explore how Mask2Former and OneFormer are driving the shift toward universal segmentation in computer vision.