Machine learning algorithms are the backbone of modern artificial intelligence. They allow systems to make predictions, discover patterns, and adapt without being directly programmed for each task. From suggesting what movie to watch to guiding medical diagnoses, these algorithms shape how technology interacts with us daily.

Understanding them doesn't require advanced mathematics, just curiosity about how machines learn. By looking at the types of machine learning algorithms and concrete examples, we can see how they function and why they are so widely used.

Types of Machine Learning Algorithms

Machine learning algorithms are usually grouped into three categories: supervised learning, unsupervised learning, and reinforcement learning.

Supervised learning uses labeled data, where both the inputs and expected outputs are known. The goal is to build a model that can predict outcomes for new, unseen data. Common examples include Linear Regression, which predicts continuous values such as stock prices, and Support Vector Machines (SVMs), which classify data by finding the boundary that separates classes with the widest margin. These algorithms are often applied in tasks like fraud detection, sentiment analysis, and sales forecasting.
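To make the idea of learning from labeled pairs concrete, here is a minimal sketch of linear regression with a single input feature, fitted by ordinary least squares. The data values and the `fit_line` helper are made up for illustration; real projects would normally rely on a library rather than hand-written formulas.

```python
def fit_line(xs, ys):
    """Ordinary least squares for one feature: y is modelled as slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov_xy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    slope = cov_xy / var_x
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Hypothetical labeled data: each input x has a known output y (here y = 2x + 1).
xs = [1.0, 2.0, 3.0, 4.0]
ys = [3.0, 5.0, 7.0, 9.0]

slope, intercept = fit_line(xs, ys)

# Predict the outcome for an input the model has not seen.
prediction = slope * 5.0 + intercept
print(slope, intercept, prediction)
```

The model is "trained" once on the known pairs and then reused for new inputs, which is the basic pattern behind every supervised method in this article.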

Unsupervised learning works with unlabeled data, focusing on uncovering hidden structures. One well-known approach is K-Means Clustering, which organizes data into clusters of similar items, while Principal Component Analysis (PCA) reduces the number of variables in a dataset by projecting it onto the directions of greatest variance, keeping most of the information in fewer dimensions. These techniques are useful in fields such as market segmentation, image compression, and exploratory research, where the aim is to detect relationships not immediately visible.
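The clustering idea can be sketched in a few lines. The following is a simplified K-Means loop that alternates between assigning points to their nearest centroid and moving each centroid to the mean of its points. The sample points, the fixed iteration count, and the choice of starting centroids are assumptions for illustration; practical implementations add smarter initialization and a convergence check.

```python
def k_means(points, centroids, iterations=10):
    """Simplified K-Means: returns final centroids and the points in each cluster."""
    for _ in range(iterations):
        # Assignment step: put every point in the cluster of its nearest centroid.
        clusters = [[] for _ in centroids]
        for p in points:
            distances = [sum((a - b) ** 2 for a, b in zip(p, c)) for c in centroids]
            clusters[distances.index(min(distances))].append(p)

        # Update step: move each centroid to the mean of its assigned points.
        new_centroids = []
        for cluster, old in zip(clusters, centroids):
            if cluster:
                new_centroids.append(tuple(sum(dim) / len(cluster) for dim in zip(*cluster)))
            else:
                new_centroids.append(old)  # keep an empty cluster's centroid in place
        centroids = new_centroids
    return centroids, clusters


# Hypothetical unlabeled data with two visible groups.
points = [(1.0, 1.0), (1.5, 2.0), (1.0, 1.5), (8.0, 8.0), (9.0, 8.5), (8.5, 9.0)]
centroids, clusters = k_means(points, centroids=[points[0], points[3]])
print(centroids)
print(clusters)
```

No labels are supplied anywhere: the grouping emerges only from distances between the points.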

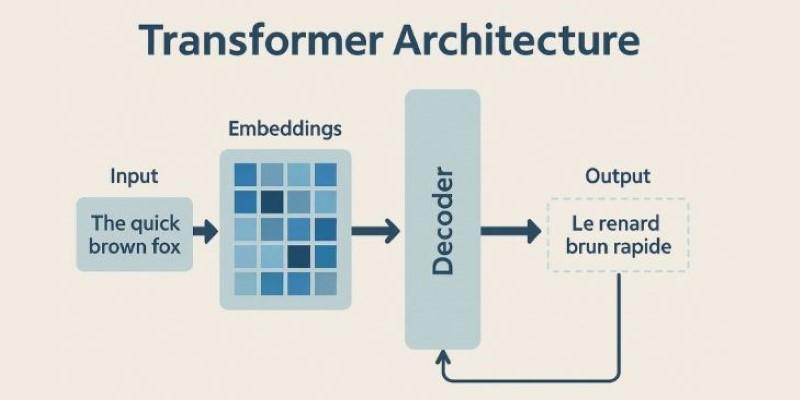

Reinforcement learning stands apart by teaching agents through interaction with an environment. The algorithm learns strategies to maximize cumulative rewards. A widely known method is Q-Learning, which estimates the expected long-term reward (the Q-value) of taking each action in each state and updates those estimates from the rewards it observes. In modern applications, Deep Q-Networks (DQNs) extend this principle with neural networks that approximate the Q-values, allowing learning from complex data like visual inputs. This family of methods is used in gaming systems and robotics, and is being explored for self-driving cars, where decisions need to adapt in real time.
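A small tabular example may help show how Q-Learning turns rewards into a strategy. The sketch below uses a made-up five-state corridor where the agent earns a reward of 1 for reaching the rightmost state. The environment, the learning rate `ALPHA`, the discount `GAMMA`, and the purely random exploration are all assumptions chosen to keep the example short.

```python
import random

N_STATES = 5            # states 0..4; state 4 is the goal and ends the episode
ACTIONS = (0, 1)        # 0 = move left, 1 = move right
ALPHA, GAMMA = 0.5, 0.9  # learning rate and discount factor (illustrative values)


def step(state, action):
    """Hypothetical environment: move along the corridor, reward 1 at the goal."""
    next_state = max(state - 1, 0) if action == 0 else state + 1
    reward = 1.0 if next_state == N_STATES - 1 else 0.0
    return next_state, reward


random.seed(0)
q = [[0.0, 0.0] for _ in range(N_STATES)]  # q[state][action]

for _ in range(500):
    state = 0
    while state != N_STATES - 1:
        action = random.choice(ACTIONS)  # explore at random; Q-Learning is off-policy
        next_state, reward = step(state, action)
        # Q-Learning update: nudge the estimate toward reward + discounted best next value.
        target = reward + GAMMA * max(q[next_state])
        q[state][action] += ALPHA * (target - q[state][action])
        state = next_state

# Greedy policy read from the learned table for the non-terminal states.
policy = [max(ACTIONS, key=lambda a: q[s][a]) for s in range(N_STATES - 1)]
print(policy)
```

Even though the agent acts randomly while learning, the table it builds favors moving right in every state, because those actions lead to the reward sooner.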

Each category has different strengths. Supervised learning thrives with rich historical data, unsupervised learning uncovers structure in the unknown, and reinforcement learning adapts dynamically through trial and error.

Common Algorithms and How They Work

Beyond the categories, the actual algorithms provide a clearer picture of how machine learning works.

Decision Trees are straightforward models that split data into branches based on feature values. Each branch ends in a leaf that gives a prediction. They are easy to interpret and often used for decision-making tasks like identifying at-risk customers. A stronger variation, Random Forest, trains many decision trees on random subsets of the data and features, then combines their outputs (a majority vote for classification, an average for regression). This typically produces more accurate results than a single tree while reducing overfitting.
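The core operation inside a decision tree is choosing where to split. As a rough illustration, the sketch below searches for the threshold on one feature that best separates two classes, scoring candidates with Gini impurity. Gini is one common criterion and is an assumption here, as are the `monthly_logins` feature and the customer labels.

```python
def gini(labels):
    """Gini impurity: 0.0 for a pure group, higher for mixed groups."""
    n = len(labels)
    if n == 0:
        return 0.0
    return 1.0 - sum((labels.count(c) / n) ** 2 for c in set(labels))


def best_split(values, labels):
    """Try each observed value as a threshold and keep the lowest weighted impurity."""
    best_threshold, best_score = None, float("inf")
    for threshold in sorted(set(values)):
        left = [lab for v, lab in zip(values, labels) if v <= threshold]
        right = [lab for v, lab in zip(values, labels) if v > threshold]
        score = (len(left) * gini(left) + len(right) * gini(right)) / len(labels)
        if score < best_score:
            best_threshold, best_score = threshold, score
    return best_threshold, best_score


# Hypothetical customer data: logins per month and a known outcome.
monthly_logins = [1, 2, 3, 10, 12, 15]
status = ["at_risk", "at_risk", "at_risk", "active", "active", "active"]

threshold, score = best_split(monthly_logins, status)
print(threshold, score)
```

A full tree repeats this search recursively on each resulting branch, and a random forest repeats the whole procedure on many resampled versions of the data.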

Naive Bayes, grounded in probability theory, is widely used in text classification. It assumes features are independent, which is rarely true, yet it performs well for spam filtering or sentiment detection. Its efficiency and simplicity make it a staple in natural language processing.
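To show how the independence assumption is used in practice, here is a compact sketch of a multinomial Naive Bayes text classifier. It multiplies per-word probabilities (as a sum of logarithms) for each class and picks the most likely one. The tiny training set, the add-one (Laplace) smoothing, and the `train` and `predict` helper names are assumptions for illustration.

```python
import math
from collections import Counter, defaultdict


def train(documents):
    """documents: list of (text, label) pairs. Returns the counts the model needs."""
    class_counts = Counter()
    word_counts = defaultdict(Counter)
    vocabulary = set()
    for text, label in documents:
        class_counts[label] += 1
        for word in text.lower().split():
            word_counts[label][word] += 1
            vocabulary.add(word)
    return class_counts, word_counts, vocabulary


def predict(text, class_counts, word_counts, vocabulary):
    total_docs = sum(class_counts.values())
    scores = {}
    for label in class_counts:
        # Start from the log prior, then add one log-likelihood term per word,
        # treating the words as independent given the class.
        score = math.log(class_counts[label] / total_docs)
        total_words = sum(word_counts[label].values())
        for word in text.lower().split():
            if word not in vocabulary:
                continue  # ignore words never seen in training
            smoothed = word_counts[label][word] + 1  # add-one smoothing
            score += math.log(smoothed / (total_words + len(vocabulary)))
        scores[label] = score
    return max(scores, key=scores.get)


# Hypothetical training messages.
model = train([
    ("win free prize now", "spam"),
    ("free money win", "spam"),
    ("meeting schedule for monday", "ham"),
    ("project meeting notes", "ham"),
])

print(predict("free prize", *model))
print(predict("monday meeting", *model))
```

Training only requires counting words, which is why the method stays fast even on large text collections.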

K-Nearest Neighbors (KNN) predicts outcomes by looking at the closest training data points to a new case. Though simple, it is effective for recommendation engines, handwriting recognition, or small datasets. Its main drawback is the computational cost during prediction, since it compares new data against all prior samples.
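KNN is simple enough to sketch in full. The example below classifies a new point by a majority vote among its `k` closest training points. Euclidean distance and `k=3` are assumptions, and the labeled points are made up.

```python
import math
from collections import Counter


def knn_predict(training, query, k=3):
    """training: list of (point, label) pairs. Returns the majority label of the k nearest."""
    # Every prediction sorts the whole training set by distance to the query,
    # which is the prediction-time cost mentioned above.
    neighbors = sorted(training, key=lambda item: math.dist(item[0], query))[:k]
    votes = Counter(label for _, label in neighbors)
    return votes.most_common(1)[0][0]


# Hypothetical labeled points in two groups.
training = [
    ((1, 1), "A"), ((1, 2), "A"), ((2, 1), "A"),
    ((8, 8), "B"), ((8, 9), "B"), ((9, 8), "B"),
]

print(knn_predict(training, (2, 2)))
print(knn_predict(training, (7, 7)))
```

There is no training step at all: the "model" is the stored data, so all the work happens when a prediction is requested.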

Gradient Boosting Machines (GBMs), including XGBoost, build models in sequence, with each new tree fitted to the errors left by the previous ones. These ensemble methods often deliver high accuracy on structured (tabular) data. They are common in credit scoring, churn prediction, and competition-winning solutions on structured datasets.
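The sequential error-correcting idea can be illustrated with a stripped-down booster for regression. In the sketch below, each round fits a one-split tree (a "stump") to the current residuals and adds a scaled version of it to the running prediction. Squared-error loss, the stump learner, the learning rate, and the data are assumptions; libraries such as XGBoost add regularization and many other refinements not shown here.

```python
def fit_stump(xs, residuals):
    """Find the single threshold whose two leaf means best fit the residuals."""
    best = None
    for threshold in sorted(set(xs))[:-1]:  # skip the max so both sides are non-empty
        left = [r for x, r in zip(xs, residuals) if x <= threshold]
        right = [r for x, r in zip(xs, residuals) if x > threshold]
        left_mean = sum(left) / len(left)
        right_mean = sum(right) / len(right)
        error = (sum((r - left_mean) ** 2 for r in left)
                 + sum((r - right_mean) ** 2 for r in right))
        if best is None or error < best[0]:
            best = (error, threshold, left_mean, right_mean)
    return best[1:]


def boost(xs, ys, rounds=20, learning_rate=0.5):
    base = sum(ys) / len(ys)           # start from a constant prediction
    predictions = [base] * len(xs)
    stumps = []
    for _ in range(rounds):
        residuals = [y - p for y, p in zip(ys, predictions)]
        threshold, left_value, right_value = fit_stump(xs, residuals)
        stumps.append((threshold, left_value, right_value))
        # Add a damped correction from the new stump to every prediction.
        predictions = [
            p + learning_rate * (left_value if x <= threshold else right_value)
            for x, p in zip(xs, predictions)
        ]
    return base, stumps, predictions


def mse(ys, predictions):
    return sum((y - p) ** 2 for y, p in zip(ys, predictions)) / len(ys)


# Hypothetical training data.
xs = [1, 2, 3, 4, 5, 6]
ys = [1.0, 1.2, 3.1, 3.0, 6.2, 5.9]

baseline_error = mse(ys, [sum(ys) / len(ys)] * len(ys))
_, _, one_round = boost(xs, ys, rounds=1)
_, stumps, twenty_rounds = boost(xs, ys, rounds=20)
print(baseline_error, mse(ys, one_round), mse(ys, twenty_rounds))
```

The training error shrinks as rounds are added. On real data that same property can lead to overfitting, which is why the number of rounds and the learning rate are usually tuned on held-out data.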

For unsupervised learning, Hierarchical Clustering offers a flexible approach, creating a hierarchy of groups that can be cut at different levels depending on the desired granularity. It is often applied in biology, such as grouping genes with similar expressions.
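As a rough sketch of the bottom-up (agglomerative) form of hierarchical clustering, the code below starts with every value in its own cluster and repeatedly merges the two closest clusters. Stopping at a chosen cluster count plays the role of cutting the hierarchy at a given level. Single linkage (distance between the closest members) and the one-dimensional "expression level" values are assumptions for illustration.

```python
def single_linkage(values, n_clusters):
    """Merge the two closest clusters until only n_clusters remain."""
    clusters = [[v] for v in values]  # start with one cluster per value
    while len(clusters) > n_clusters:
        best = None
        for i in range(len(clusters)):
            for j in range(i + 1, len(clusters)):
                # Single linkage: distance between the closest pair of members.
                distance = min(abs(a - b) for a in clusters[i] for b in clusters[j])
                if best is None or distance < best[0]:
                    best = (distance, i, j)
        _, i, j = best
        clusters[i] = clusters[i] + clusters[j]
        del clusters[j]
    return sorted(sorted(c) for c in clusters)


# Hypothetical expression levels for seven genes.
levels = [1.0, 1.2, 1.1, 5.0, 5.3, 9.8, 10.0]

fine = single_linkage(levels, n_clusters=3)
coarse = single_linkage(levels, n_clusters=2)
print(fine)
print(coarse)
```

Asking for fewer clusters simply continues the same merge sequence further, so the coarse grouping always contains the fine grouping.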

Dimensionality reduction techniques, such as t-SNE, are valuable for making sense of high-dimensional data. By projecting large datasets into two or three dimensions, t-SNE helps visualize natural clusters, aiding in exploratory data analysis.

Reinforcement learning continues to evolve with models such as DQNs. They merge reinforcement learning principles with deep learning, making them suitable for environments like video games or autonomous systems where inputs are too complex for simple algorithms.

Applications and Matching Algorithms to Problems

The value of these algorithms becomes clearer through real-world applications.

In finance, supervised algorithms like logistic regression or random forests predict loan defaults and detect fraudulent activity. They handle structured data effectively, making them essential for risk management. Retail businesses use supervised models to forecast demand and classify customer behavior, enabling more precise marketing strategies.

Healthcare applies machine learning across both supervised and unsupervised types. Diagnostic tools employ classification models to detect diseases, while clustering methods help uncover patterns in genetic data or group patients with similar conditions. PCA assists researchers in simplifying massive datasets while retaining crucial medical insights.

E-commerce benefits from combining methods. Recommendation systems often use collaborative filtering, which infers preferences from patterns of similarity among users and items, alongside regression models to predict user ratings. This blend personalizes shopping experiences by pairing user similarity with predictive scoring.

Reinforcement learning stands out in robotics and transportation. Self-driving cars need to interpret traffic, adapt to changing conditions, and make split-second decisions. Algorithms like Q-Learning and DQNs offer a way to improve performance through constant feedback, often by training in simulation before anything is deployed.

Creative industries also use machine learning, though often with more experimental approaches. Generative adversarial networks (GANs), which train a generator against a discriminator and so borrow ideas from both unsupervised and supervised learning, generate realistic art, music, and even video. While not the focus here, these innovations show how algorithms expand beyond traditional analytics into areas of creativity.

The choice of algorithm depends not only on the data but also on the problem. Sometimes, practitioners test several models, comparing performance using validation techniques before selecting the most suitable. The quality of the results often relies as much on the match between problem and method as on the algorithm itself.
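One common validation technique is k-fold cross-validation: the data is split into k parts, each part takes a turn as the test set, and the errors are averaged. The sketch below compares two deliberately simple candidate models this way. The models, the fold layout, and the data are assumptions for illustration.

```python
def mean_model(train_x, train_y):
    """Baseline: always predict the average training output."""
    mean = sum(train_y) / len(train_y)
    return lambda x: mean


def line_model(train_x, train_y):
    """Least-squares straight line through the training data."""
    n = len(train_x)
    mean_x = sum(train_x) / n
    mean_y = sum(train_y) / n
    slope = (sum((x - mean_x) * (y - mean_y) for x, y in zip(train_x, train_y))
             / sum((x - mean_x) ** 2 for x in train_x))
    return lambda x: mean_y + slope * (x - mean_x)


def cross_val_mse(fit, xs, ys, k=4):
    """Average test-fold mean squared error over k folds."""
    fold_errors = []
    for fold in range(k):
        test_idx = list(range(fold, len(xs), k))  # every k-th sample forms a fold
        train_x = [x for i, x in enumerate(xs) if i not in test_idx]
        train_y = [y for i, y in enumerate(ys) if i not in test_idx]
        model = fit(train_x, train_y)  # fit only on data outside the test fold
        errors = [(model(xs[i]) - ys[i]) ** 2 for i in test_idx]
        fold_errors.append(sum(errors) / len(errors))
    return sum(fold_errors) / k


# Hypothetical data: roughly y = 3x + 2 with small fixed perturbations.
noise = [0.3, -0.2, 0.1, -0.4, 0.2, 0.0, -0.1, 0.3, -0.3, 0.1, 0.2, -0.2]
xs = [float(i) for i in range(12)]
ys = [3 * x + 2 + e for x, e in zip(xs, noise)]

scores = {
    "mean": cross_val_mse(mean_model, xs, ys),
    "line": cross_val_mse(line_model, xs, ys),
}
best = min(scores, key=scores.get)
print(scores, best)
```

Because every score comes from data the model did not see during fitting, the comparison reflects how each candidate is likely to behave on new cases rather than how well it memorized the training set.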

Final Thoughts

Machine learning algorithms range from simple decision trees to advanced reinforcement systems, each with its place. Some predict outcomes, others group unlabeled data, and some learn strategies through interaction. What connects them is their capacity to improve by processing information, finding patterns, and refining predictions over time. As machine learning continues to influence daily life, understanding the types of machine learning algorithms is becoming more important, not just for developers but for anyone interested in how technology shapes decisions. Knowing which algorithm to use is the first step toward building smarter systems.