Retrieval-Augmented Generation (RAG) is becoming a preferred approach for many developers looking to combine the strengths of search and generative models. The appeal lies in its ability to fetch relevant information from external data sources and blend it into the output of a large language model. Instead of relying solely on what the model learned during training, a RAG pipeline can pull in live or domain-specific data to produce grounded, more accurate responses.

It's especially useful in scenarios where factual correctness and domain alignment matter. Think of documentation tools, internal assistants, or search-enabled chatbots. This guide walks you through building and deploying a working RAG pipeline, providing practical steps and minimal fluff.

Setting Up the Core Components

Before writing a single line of code, it's helpful to understand the three major components that make up a RAG pipeline: the retriever, the embedding model, and the generator.

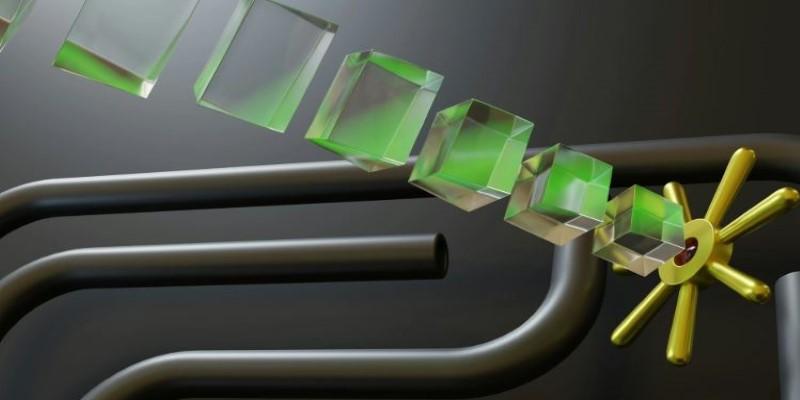

The retriever is responsible for finding relevant information in a corpus of documents. This is typically done with vector search. Each document or chunk of text is turned into an embedding (a numerical vector representing its content) and stored in a vector database. When a user asks a question, the query is converted into an embedding as well, and the retriever finds the most similar chunks by comparing vectors, commonly with cosine similarity or a dot product.
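To make "comparing vectors" concrete, here is a minimal brute-force sketch of top-k retrieval by cosine similarity. The three-dimensional vectors and document names are made up for illustration; real embeddings have hundreds of dimensions, and a vector database would normally use an approximate nearest-neighbor index rather than scoring every stored vector.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors: 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # a zero vector has no direction; treat it as unrelated
    return dot / (norm_a * norm_b)


def top_k(query_vec: list[float], doc_vecs: dict[str, list[float]], k: int = 2) -> list[tuple[str, float]]:
    """Score every stored vector against the query and keep the k best (brute force)."""
    scored = [(doc_id, cosine_similarity(query_vec, vec)) for doc_id, vec in doc_vecs.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]


# Toy 3-dimensional "embeddings" (made up for illustration).
docs = {
    "refunds": [0.9, 0.1, 0.0],
    "shipping": [0.1, 0.9, 0.1],
    "warranty": [0.7, 0.2, 0.3],
}
print([doc_id for doc_id, _ in top_k([1.0, 0.0, 0.1], docs, k=2)])  # ['refunds', 'warranty']
```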

The embedding model converts both documents and queries into embeddings, and the same model must be used for both so that their vectors are comparable. Popular choices include OpenAI's text-embedding models, the open-source sentence-transformers library (whose models are distributed through the Hugging Face Hub), and Cohere's embedding models. The quality of the embeddings directly affects retrieval performance, so choose carefully. Depending on your needs, you may prefer smaller, faster models or larger ones that capture semantic meaning better.
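The sketch below illustrates the interface an embedding model exposes: text in, fixed-length vector out. It is a stand-in, not a real model. It hashes words into buckets (a "hashed bag-of-words"), so it captures word overlap rather than meaning, which is exactly the gap a trained embedding model fills. The name `toy_embed` and the 64-dimension size are arbitrary choices for this example.

```python
import hashlib
import math
import re


def toy_embed(text: str, dim: int = 64) -> list[float]:
    """Hashed bag-of-words vector, L2-normalized. A stand-in, not a semantic model."""
    vec = [0.0] * dim
    for token in re.findall(r"[a-z0-9]+", text.lower()):
        # sha256 gives the same bucket on every run; built-in hash() is salted per process
        bucket = int(hashlib.sha256(token.encode("utf-8")).hexdigest(), 16) % dim
        vec[bucket] += 1.0
    norm = math.sqrt(sum(v * v for v in vec))
    return [v / norm for v in vec] if norm else vec


# Documents and queries must go through the same function to land in the same vector space.
doc_vec = toy_embed("Refunds are processed within five business days.")
query_vec = toy_embed("How long do refunds take?")
print(len(doc_vec), len(query_vec))  # 64 64
```

Swapping in a real model should only change the body of the function, not how the rest of the pipeline calls it.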

The generator is typically a large language model such as GPT-4, LLaMA, or Claude. It takes the retrieved documents and the original query, then generates a response grounded in the retrieved content. Prompt design plays a big role here: if the documents are stuffed in without structure or context, even the best models can struggle. A good prompt gives the model clear instructions, marks where the context begins and ends, and often specifies the expected output format.

To get started, choose a stack that fits your project. You’ll need:

- A document source (text files, PDFs, scraped content, etc.)

- A text splitter to break content into chunks (like LangChain’s RecursiveCharacterTextSplitter)

- An embedding model to convert text to vectors

- A vector index or database, such as FAISS (a similarity-search library), Weaviate, or Pinecone

- A retrieval wrapper that embeds a query and returns the top-k matching chunks

- A language model API or local deployment

- Some glue code to manage the flow between parts
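One way to keep the glue code small is to treat each component as a swappable interface. The following is a minimal sketch under that assumption; `Retriever`, `RAGPipeline`, and the stub `KeywordRetriever` are hypothetical names, and the lambda stands in for a real LLM call so the wiring can be exercised without any external service.

```python
from dataclasses import dataclass
from typing import Callable, Protocol


class Retriever(Protocol):
    """Anything that maps a query to its k most relevant text chunks."""

    def retrieve(self, query: str, k: int) -> list[str]: ...


@dataclass
class RAGPipeline:
    retriever: Retriever
    generate: Callable[[str], str]  # wraps an LLM API call or a local model
    k: int = 3

    def build_prompt(self, question: str, chunks: list[str]) -> str:
        context = "\n\n".join(chunks)
        return f"Context:\n{context}\n\nQuestion: {question}\nAnswer:"

    def answer(self, question: str) -> str:
        chunks = self.retriever.retrieve(question, self.k)
        return self.generate(self.build_prompt(question, chunks))


class KeywordRetriever:
    """Stub for wiring tests: returns chunks that share at least one word with the query."""

    def __init__(self, chunks: list[str]):
        self.chunks = chunks

    def retrieve(self, query: str, k: int) -> list[str]:
        words = set(query.lower().split())
        return [c for c in self.chunks if words & set(c.lower().split())][:k]


pipeline = RAGPipeline(
    retriever=KeywordRetriever(["refunds take five days", "shipping is free"]),
    generate=lambda prompt: prompt.splitlines()[1],  # fake LLM: echoes the first context line
)
print(pipeline.answer("how long do refunds take"))  # refunds take five days
```

In a real deployment, the stub retriever would be replaced by a vector-store query and `generate` by your model client; the pipeline class itself would not need to change.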

Building the RAG Pipeline Step by Step

Once you’ve selected your tools, the first phase is preprocessing. Most documents aren’t ready to use as-is: you’ll want to clean them, strip headers and boilerplate, and split them into overlapping chunks that preserve meaning. Overlap reduces the chance that an answer spanning a chunk boundary gets cut in half and lost. Text splitters usually let you set the chunk size and overlap, which you’ll need to tune against the input limits of both your embedding model and your generator.
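As a rough illustration of overlapping chunks, here is a minimal word-based splitter. It measures size in words for readability, whereas LangChain's RecursiveCharacterTextSplitter counts characters by default and prefers to break on paragraph and line boundaries. The `clean` step here only normalizes whitespace; real header and boilerplate removal depends on your documents, and the default sizes are illustrative assumptions.

```python
import re


def clean(text: str) -> str:
    """Collapse runs of whitespace. Header/boilerplate removal is corpus-specific."""
    return re.sub(r"\s+", " ", text).strip()


def split_with_overlap(text: str, chunk_size: int = 200, overlap: int = 50) -> list[str]:
    """Split text into windows of `chunk_size` words; neighbors share `overlap` words."""
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be >= 0 and smaller than chunk_size")
    words = clean(text).split()
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # this window already reached the end of the text
    return chunks


sample = " ".join(f"w{i}" for i in range(10))
for chunk in split_with_overlap(sample, chunk_size=4, overlap=2):
    print(chunk)
```

With `chunk_size=4` and `overlap=2`, each chunk starts two words after the previous one, so every pair of neighboring chunks shares two words.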

Next, generate embeddings for each chunk and store them in your vector store. Hosted services usually provide an API to upload documents and retrieve the top-k matches; with FAISS running locally, you build the index and handle retrieval in your own code. Either way, embedding the corpus is largely a one-time cost, although chunks need to be re-embedded whenever their source documents change.
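If your documents do change, one common approach is to fingerprint each chunk's text and re-embed only chunks whose fingerprint changed. The `InMemoryIndex` class below is a hypothetical sketch of that idea using SHA-256 content hashes; hosted vector stores and FAISS each have their own APIs for inserting and deleting vectors, which this does not attempt to mirror. `fake_embed` is a placeholder so the example runs without a model.

```python
import hashlib
from typing import Callable


class InMemoryIndex:
    """Stores one vector per chunk and re-embeds only chunks whose text changed."""

    def __init__(self, embed: Callable[[str], list[float]]):
        self.embed = embed
        self.vectors: dict[str, list[float]] = {}  # chunk_id -> vector
        self.hashes: dict[str, str] = {}  # chunk_id -> sha256 of the chunk text

    def upsert(self, chunks: dict[str, str]) -> int:
        """Add or update chunks; returns how many were (re)embedded."""
        embedded = 0
        for chunk_id, text in chunks.items():
            digest = hashlib.sha256(text.encode("utf-8")).hexdigest()
            if self.hashes.get(chunk_id) == digest:
                continue  # unchanged since the last run: keep the stored vector
            self.vectors[chunk_id] = self.embed(text)
            self.hashes[chunk_id] = digest
            embedded += 1
        return embedded

    def delete(self, chunk_id: str) -> None:
        self.vectors.pop(chunk_id, None)
        self.hashes.pop(chunk_id, None)


def fake_embed(text: str) -> list[float]:
    """Placeholder embedding so the example runs without a model."""
    return [float(len(text))]


index = InMemoryIndex(fake_embed)
print(index.upsert({"a": "alpha", "b": "beta"}))  # 2 (both new)
print(index.upsert({"a": "alpha", "b": "beta v2"}))  # 1 (only "b" changed)
```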

Now it’s time to set up retrieval. When the user inputs a query, it’s passed through the same embedding model. The retriever then returns the closest document chunks. These become the context for the generator. Prompt formatting is key here. A template like:

```
Answer the following question based on the context provided. If the answer is not in the context, say "I don't know."

Context:
[chunk1]
[chunk2]
...

Question: [user question]

Answer:
```

This makes expectations clear to the model and tends to reduce hallucination. Depending on your model's context window, you may need to manage token limits carefully by reducing the number of retrieved chunks or their size.
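A minimal sketch of filling that template while respecting a context budget might look like this. It assumes the chunks arrive sorted best-first and approximates tokens by counting whitespace-separated words, which is only a rough proxy; in practice you would count with your model's own tokenizer. `build_prompt`, `approx_tokens`, and `max_context_tokens` are illustrative names, and the sample chunks are made up.

```python
TEMPLATE = (
    "Answer the following question based on the context provided. "
    "If the answer is not in the context, say \"I don't know.\"\n\n"
    "Context:\n\n{context}\n\n"
    "Question: {question}\n\n"
    "Answer:"
)


def approx_tokens(text: str) -> int:
    """Crude proxy: whitespace-separated words. Use your model's tokenizer in practice."""
    return len(text.split())


def build_prompt(question: str, chunks: list[str], max_context_tokens: int = 1000) -> str:
    """Fill the template with the highest-ranked chunks that fit the context budget."""
    selected, used = [], 0
    for chunk in chunks:  # assumes chunks are already sorted best-first
        cost = approx_tokens(chunk)
        if used + cost > max_context_tokens:
            break  # stop at the first chunk that would overflow, preserving rank order
        selected.append(chunk)
        used += cost
    return TEMPLATE.format(context="\n\n".join(selected), question=question)


ranked = [
    "Refunds are issued within five days.",
    "Shipping is free over $50.",
    "Our office is in Berlin.",
]
print(build_prompt("How fast are refunds?", ranked, max_context_tokens=12))
```

With a 12-word budget, the first two chunks (6 and 5 words) fit and the third is dropped. Stopping at the first overflow, rather than skipping ahead to smaller chunks, keeps the most relevant material at the top of the context.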

Finally, feed the formatted prompt to the generator. Whether you use an API or a local model, wrap the call in error handling and logging to catch failures or latency spikes. This completes your basic RAG loop: ingest, embed, retrieve, generate.
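Here is one hedged sketch of that wrapper: it times each call, logs failures with the standard `logging` module, and retries with exponential backoff. The `generate` callable stands in for whatever API client or local model you use, and `call_with_retries` is a hypothetical name. Catching every `Exception` keeps the example short; a real client would usually retry only on transient errors such as timeouts or rate limits.

```python
import logging
import time
from typing import Callable

logger = logging.getLogger("rag.generate")


def call_with_retries(generate: Callable[[str], str], prompt: str,
                      attempts: int = 3, base_delay: float = 0.5) -> str:
    """Call the generator, logging latency and retrying failures with exponential backoff."""
    for attempt in range(1, attempts + 1):
        start = time.perf_counter()
        try:
            answer = generate(prompt)
        except Exception:
            logger.exception("generation failed (attempt %d/%d)", attempt, attempts)
            if attempt == attempts:
                raise  # out of retries: let the caller decide how to respond
            time.sleep(base_delay * 2 ** (attempt - 1))  # 0.5s, 1s, 2s, ...
            continue
        logger.info("generation succeeded in %.3fs (attempt %d)",
                    time.perf_counter() - start, attempt)
        return answer
    raise ValueError("attempts must be at least 1")  # only reached when the loop never ran


logging.basicConfig(level=logging.INFO)
print(call_with_retries(lambda p: p.upper(), "hello"))  # HELLO
```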

Deploying and Optimizing Your RAG System

Once you’ve confirmed your pipeline works locally, the next step is to move it into production. This means thinking about APIs, scaling, and monitoring.

Most deployments wrap the RAG pipeline inside an API endpoint. Frameworks like FastAPI (for Python) make this easy. Define a single POST route that accepts user questions, runs them through your pipeline, and returns the generated answer. Keep latency in mind here. If your retrieval takes too long or your model generates slowly, responses will lag. Caching, batching, and async calls can help.
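The text suggests FastAPI; to keep this sketch dependency-free, it uses the standard library's `http.server` instead, which is fine for illustration but not intended for production traffic. The `/ask` route, the JSON shape `{"question": ...}`, and `run_pipeline` are assumptions, and `functools.lru_cache` provides a simple in-process cache so repeated questions skip retrieval and generation.

```python
import json
from functools import lru_cache
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer


def run_pipeline(question: str) -> str:
    """Hypothetical placeholder for the retrieve -> prompt -> generate chain."""
    return f"(answer to: {question})"


@lru_cache(maxsize=1024)
def cached_answer(question: str) -> str:
    # Repeated questions are served from memory and skip retrieval and generation.
    return run_pipeline(question)


class AskHandler(BaseHTTPRequestHandler):
    def do_POST(self) -> None:
        if self.path != "/ask":
            self.send_error(404)
            return
        length = int(self.headers.get("Content-Length", 0))
        try:
            payload = json.loads(self.rfile.read(length) or b"{}")
            question = " ".join(str(payload["question"]).split())  # normalize whitespace
        except (ValueError, KeyError, TypeError):
            self.send_error(400, "expected a JSON body with a 'question' field")
            return
        body = json.dumps({"answer": cached_answer(question)}).encode("utf-8")
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)


def serve(port: int = 8000) -> None:
    """Run the endpoint until interrupted (not called automatically)."""
    ThreadingHTTPServer(("127.0.0.1", port), AskHandler).serve_forever()
```

Call `serve()` to listen on port 8000. In FastAPI, the same logic would live in a function registered with `@app.post("/ask")`, and once you run more than one process, a shared cache would need to replace the per-process `lru_cache`.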

For deployment, containerization is standard. Build a Docker image that contains all your dependencies, then deploy it to a cloud platform or a container orchestration platform such as Kubernetes. If you're using local models, make sure your deployment environment has enough memory and compute. For hosted models, check your usage quotas and API rate limits.

Monitoring is another important piece. You'll want to log every user query, which documents were retrieved, and what the model produced. This helps you trace bad outputs and pinpoint cases where retrieval failed. You can also add feedback hooks to collect user ratings and improve relevance over time.
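A minimal sketch of such logging: one JSON line per request containing the question, the retrieved chunk ids with their scores, and the answer, plus a request id that a feedback hook can reference later. The function names, field names, and the `rag.audit` logger name are hypothetical; route the output to whatever log pipeline you already use. The sample question and chunk id are made up.

```python
import json
import logging
import time
import uuid

logger = logging.getLogger("rag.audit")


def log_interaction(question: str, retrieved: list[tuple[str, float]], answer: str) -> str:
    """Emit one JSON line per request; returns a request id for later feedback."""
    request_id = str(uuid.uuid4())
    record = {
        "request_id": request_id,
        "ts": time.time(),
        "question": question,
        "retrieved": [{"chunk_id": cid, "score": round(score, 4)} for cid, score in retrieved],
        "answer": answer,
    }
    logger.info(json.dumps(record))
    return request_id


def log_feedback(request_id: str, rating: int, comment: str = "") -> None:
    """Feedback hook: ratings are joined to the original request via request_id."""
    logger.info(json.dumps({"request_id": request_id, "rating": rating, "comment": comment}))


logging.basicConfig(level=logging.INFO, format="%(message)s")
rid = log_interaction("How fast are refunds?", [("doc-12#3", 0.83)], "Within five days.")
log_feedback(rid, rating=1)
```

Because retrieval results are logged next to the answer, a bad output can be traced to either a retrieval miss (the right chunk never appeared) or a generation problem (it appeared but was ignored).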

One challenge many teams face is latency. Embedding, retrieval, and generation all take time. You can speed this up by:

- Using faster embedding models

- Lowering k, the number of chunks retrieved per query

- Running independent steps in parallel, such as queries against several indexes

- Pruning or filtering documents based on metadata before retrieval
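Metadata pre-filtering can be sketched as follows: discard chunks whose metadata does not match the request before any similarity scoring, so fewer vectors are compared. Many vector databases support this kind of filter natively; the `Chunk` dataclass, `filtered_search`, and the `product` field are hypothetical. In this brute-force version a smaller `k` mainly saves time downstream, since fewer chunks end up in the prompt.

```python
from dataclasses import dataclass, field


@dataclass
class Chunk:
    chunk_id: str
    vector: list[float]  # assumed L2-normalized, so a dot product equals cosine similarity
    metadata: dict[str, str] = field(default_factory=dict)


def filtered_search(query_vec: list[float], chunks: list[Chunk],
                    filters: dict[str, str], k: int = 3) -> list[str]:
    """Drop chunks that fail any metadata filter, then score only the survivors."""
    candidates = [c for c in chunks
                  if all(c.metadata.get(key) == value for key, value in filters.items())]
    scored = sorted(
        ((sum(q * v for q, v in zip(query_vec, c.vector)), c.chunk_id) for c in candidates),
        reverse=True,
    )
    return [chunk_id for _, chunk_id in scored[:k]]


chunks = [
    Chunk("a", [1.0, 0.0], {"product": "x"}),
    Chunk("b", [0.0, 1.0], {"product": "x"}),
    Chunk("c", [1.0, 0.0], {"product": "y"}),
]
print(filtered_search([1.0, 0.0], chunks, {"product": "x"}, k=1))  # ['a']
```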

Don’t forget about evaluation. RAG pipelines can fail silently, returning answers that look plausible but aren’t supported by the retrieved documents. Periodic checks against ground-truth answers, or manual audits, help catch this. Some teams also generate synthetic questions from their own documents to test retrieval and answer quality.
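For the retrieval half of such checks, a simple metric is the hit rate at k: the fraction of test questions whose known-relevant chunk appears among the top-k results (with one relevant chunk per question, this equals recall@k). The sketch below assumes you have a list of (question, expected chunk id) pairs and a `retrieve(question, k)` function; both, like the stub results, are hypothetical. It measures retrieval only, so answer faithfulness still needs the audits described above.

```python
from typing import Callable


def hit_rate_at_k(eval_set: list[tuple[str, str]],
                  retrieve: Callable[[str, int], list[str]], k: int = 3) -> float:
    """Fraction of questions whose known-relevant chunk id appears in the top-k results."""
    if not eval_set:
        return 0.0
    hits = sum(1 for question, expected_id in eval_set if expected_id in retrieve(question, k))
    return hits / len(eval_set)


# Stub retriever with fixed rankings, standing in for a real vector search.
stub_results = {"q1": ["c1", "c2"], "q2": ["c3", "c4"]}
eval_pairs = [("q1", "c2"), ("q2", "c9")]
print(hit_rate_at_k(eval_pairs, lambda q, k: stub_results[q][:k], k=2))  # 0.5
```

Tracking this number across changes to chunk size, overlap, or embedding model shows whether a tweak actually helped retrieval before you look at generated answers.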

Final Thoughts

A Retrieval-Augmented Generation pipeline combines external data with large language models to deliver grounded, more accurate responses. By embedding documents, retrieving the most relevant ones for each query, and guiding the generator with that context, it reduces hallucinations and reliance on outdated training data. Building one involves document preparation, embedding, retrieval, and generation; deploying it is mostly about speed, stability, and monitoring. That makes RAG a strong fit for assistants, search tools, and knowledge systems.