OpenAI has released a cutting-edge AI-powered video creation tool. From basic text prompts, it produces lifelike videos in a matter of seconds. Many see it as a breakthrough that could transform the digital world, but experts caution about its risks. The tool can create realistic footage, but it can also be harmful if misused.

Deepfakes, fake news, and identity theft are among the risks tied to AI-generated videos. Concerns about privacy and the manipulation of public opinion are growing quickly. These problems demonstrate the need for laws and ethical standards. This article examines the security risks and ethical issues of OpenAI video creation, and the need for stringent regulations to keep this technology from falling into the wrong hands.

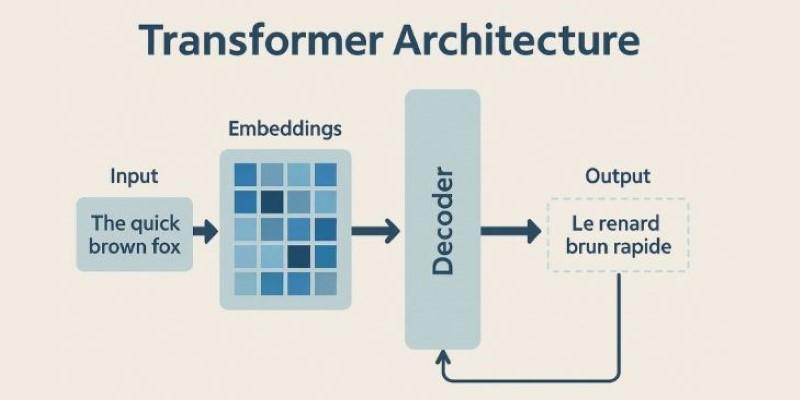

How OpenAI’s AI Video Generator Works

OpenAI’s video generator uses deep learning models trained on massive datasets to create realistic-looking videos from text instructions. The system processes the language of the prompt, interprets its context, and applies visual elements to match the description. It supports both natural animations and motion effects, which makes it suitable for entertainment, education, and advertising.

However, such capability calls responsible use into question. Anyone can produce professional-looking videos without filming anything, which means that authentic-looking content can be created without a legitimate source. The risks linked to this AI-driven video tool stem from its unrestricted creative ability, and knowing how it functions explains how misuse can occur. Because a single prompt can produce false evidence or misleading content in a matter of seconds, regulation is urgently needed worldwide.

Major Dangers of AI Video Generation

The dangers of AI video generation are significant and growing. Deepfake videos, which convincingly mimic real people, pose a serious risk. They have the power to ruin reputations and destabilize political systems. When fake videos appear authentic, misinformation spreads more quickly. Criminals could use these videos for blackmail and scams. Financial fraud, in which fake authorizations dupe businesses, is another risk.

Because AI creates realistic-looking videos, these threats are difficult to identify. As fake videos proliferate, trust in digital content is eroding. The risks are social as well as technical. If they are not stopped, fake videos will undermine both personal security and business. Experts concur that the rapid pace of AI development will make these risks harder to manage unless preventive action is taken quickly.

Ethical Concerns in AI Video Creation

The emergence of AI-generated videos raises ethical concerns. One major ethical issue with OpenAI video creation is consent. Fake videos may use people’s voices or faces without their permission, which violates their personal rights and privacy. Accountability is another problem. Who bears responsibility when a damaging video is produced?

Because AI tools don’t always track users, it’s frequently impossible to identify the creator. Even videos made for amusement can mislead viewers and distort reality. Ethical standards must ensure fairness; in their absence, society runs the risk of normalizing dishonesty. Companies creating these tools must give top priority to safety and ethics. Ignoring ethical issues could have serious and long-lasting effects on communication, culture, and trust in all facets of digital life.

Security and Privacy Threats from AI Videos

The risks associated with this advanced AI-powered video system also extend to personal privacy and security. Criminals can use AI videos to get around identity verification, since fake videos can fool security checks and biometric systems. Hackers may pose as business executives to authorize data transfers or payments, and such fraud may result in massive financial losses. AI-generated content also raises the risk of phishing, in which fake videos trick users into disclosing personal information.

Featuring someone in a video without permission is a clear invasion of their privacy. These videos have the potential to cause emotional distress and damage reputations. Social media companies struggle to prevent the rapid spread of false information. Experts in cybersecurity caution that new defenses are required. Without robust protections, misuse of AI videos could turn into a global security calamity that poses a threat to both people and businesses.

Why Regulations Are Urgently Needed

To reduce the serious risks linked to AI-generated videos, regulations are essential. Existing laws are outdated and do not account for the complexity of AI tools. Governments must establish strict regulations for content verification. Mandatory watermarks would make it easier for users to identify AI-generated videos. Public trust also depends on developers being transparent. Without these safeguards, criminals can abuse the technology without worrying about facing consequences.

Because online content travels across borders, international cooperation is essential. These regulations ought to be based on privacy and consent laws. Organizations such as OpenAI must also implement strict internal policies. Delays in regulation could cause irreversible harm. Only robust legislation and technological safeguards can prevent the unchecked misuse of AI video generators.

Challenges in Stopping AI Video Misuse

The speed and accessibility of AI videos make it challenging to prevent their misuse. Anyone with an internet connection can use these tools. Fake videos are nearly impossible to remove once they start to circulate. Social media sites struggle to spot manipulated content promptly. Though they are getting better, AI detection systems are still unable to keep up with the volume of newly created content.

Legal action is slow compared to the speed at which fake videos are produced. Video disinformation is especially dangerous because people tend to believe what they see. To lessen harm, awareness and education are essential. Developers must incorporate safety features such as watermarks and user verification. Governments, tech companies, and communities around the world will need to work together to combat misuse. Without this work, the risks will continue to increase and seriously undermine public confidence.
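To make the idea of a verifiable marker concrete, here is a minimal sketch in Python of a signed provenance tag. It is an illustration only, not OpenAI’s method or any existing standard: the function names, the tag fields, and the shared secret key are all hypothetical, and a signed metadata tag is a simpler stand-in for a true watermark embedded in the video frames.

```python
import hashlib
import hmac
import json


def make_provenance_tag(video_bytes: bytes, generator: str, key: bytes) -> dict:
    """Build a signed tag declaring that a video file is AI-generated.

    All field names here are hypothetical and chosen for illustration.
    """
    payload = {
        "ai_generated": True,
        "generator": generator,
        "sha256": hashlib.sha256(video_bytes).hexdigest(),
    }
    # Serialize deterministically so signer and verifier hash the same bytes.
    message = json.dumps(payload, sort_keys=True).encode("utf-8")
    signature = hmac.new(key, message, hashlib.sha256).hexdigest()
    return {"payload": payload, "signature": signature}


def verify_provenance_tag(video_bytes: bytes, tag: dict, key: bytes) -> bool:
    """Return True only if the tag is authentic and matches this exact file."""
    message = json.dumps(tag["payload"], sort_keys=True).encode("utf-8")
    expected = hmac.new(key, message, hashlib.sha256).hexdigest()
    # Constant-time comparison avoids leaking signature bytes via timing.
    if not hmac.compare_digest(expected, tag["signature"]):
        return False
    return tag["payload"]["sha256"] == hashlib.sha256(video_bytes).hexdigest()


if __name__ == "__main__":
    key = b"demo-secret-key"          # assumption: a key held by the generator
    video = b"fake video file bytes"  # placeholder for real file contents
    tag = make_provenance_tag(video, "example-generator", key)
    print(verify_provenance_tag(video, tag, key))
    print(verify_provenance_tag(video + b"tampered", tag, key))
```

A tag like this only proves that an unmodified file came from a cooperating generator. It can be stripped from a file, and it does nothing to flag videos from tools that never attach one, which is why the article’s call for detection systems and regulation still applies.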

Conclusion

Although AI-driven video technology presents enormous possibilities, it also opens the door to major problems. Misuse results in false information, fraud, and privacy violations that affect both people and institutions. Effective solutions need strict rules, open practices, and cooperation between developers and governments. Whether this technology is used responsibly will determine its future. In a rapidly changing digital world, prioritizing truth and authenticity requires balancing progress with strong safeguards to preserve trust, security, and ethics.