If you’ve ever found yourself curious about what makes those so-called “fancy” neural networks tick, you’re not alone. Plenty of folks think these systems are out of reach unless you have a doctorate or a full team of data scientists in your basement. The truth is, you can get quite far just by keeping things straightforward and focusing on the basics. Let’s take a clear look at what you actually need to think about.

Understanding the Basics Before You Start

Let’s get one thing straight right off the bat: you don’t have to know everything about neural networks before building one. But there are some essentials that will save you hours of frustration down the line.

Keep Your Goal Crystal Clear

Before anything else, figure out what you want your network to do. Are you recognizing pictures of cats? Predicting next week’s weather? If you don’t know the question, there’s no sense in looking for the answer. This is your starting line. If you keep your objective simple, all your later decisions get easier.

Don’t Overcomplicate the Data

A common trap is thinking you need mountains of complicated data to build a good model. Not true. Start small. Try working with just a few features (those columns in your spreadsheet). Sometimes, two or three good features work better than twenty messy ones. Also, check for missing values, weird outliers, and data that doesn’t make sense—these will trip you up every time.
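To make those checks concrete, here is a minimal sketch in plain Python. The toy dataset, the function names, and the 3.5 cutoff are all assumptions made for illustration; the outlier test uses a median-based score, which is one reasonable choice among several.

```python
import statistics

# Hypothetical toy dataset: each row is (height_cm, weight_kg); None marks a missing value.
rows = [
    (170.0, 65.0),
    (165.0, None),
    (180.0, 80.0),
    (175.0, 72.0),
    (172.0, 700.0),  # probably a typo for 70.0
    (168.0, 60.0),
]

def find_missing(rows):
    """Return the indices of rows that contain at least one missing value."""
    return [i for i, row in enumerate(rows) if any(v is None for v in row)]

def find_outliers(rows, column, threshold=3.5):
    """Return the indices of rows whose value in `column` sits far from the median.

    Uses a modified z-score built on the median absolute deviation (MAD),
    which stays reliable even when the outlier itself is in the data.
    """
    present = [(i, row[column]) for i, row in enumerate(rows) if row[column] is not None]
    values = [v for _, v in present]
    median = statistics.median(values)
    mad = statistics.median([abs(v - median) for v in values])
    if mad == 0:
        return []  # all values (nearly) identical, nothing to flag
    return [i for i, v in present if 0.6745 * abs(v - median) / mad > threshold]

missing_rows = find_missing(rows)
outlier_rows = find_outliers(rows, column=1)
print("rows with missing values:", missing_rows)
print("rows with suspicious weights:", outlier_rows)
```

Flagged rows still need a human decision: fix them, drop them, or keep them if the value turns out to be real.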

Understand Your Input and Output

Think about what’s going in and what you want coming out. Is your network looking at images, numbers, or text? And what do you want from it—a “yes” or “no,” a number, a label? If you line this up early, you won’t end up tangled later.
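One way to pin this down is to write out a single example exactly as the network will see it. The sketch below assumes a labeling problem with three made-up classes and three made-up numeric features; `one_hot` is a hypothetical helper name.

```python
def one_hot(label, classes):
    """Turn a label into a list with 1.0 at the label's position and 0.0 elsewhere."""
    return [1.0 if c == label else 0.0 for c in classes]

classes = ["cat", "dog", "bird"]   # hypothetical label set
features = [0.2, 0.7, 0.1]         # hypothetical numeric inputs for one example
target = one_hot("dog", classes)

input_size = len(features)   # how many numbers go in
output_size = len(classes)   # how many numbers come out
print(input_size, output_size, target)
```

Once the input and output sizes are written down like this, the first and last layers of the network are already decided.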

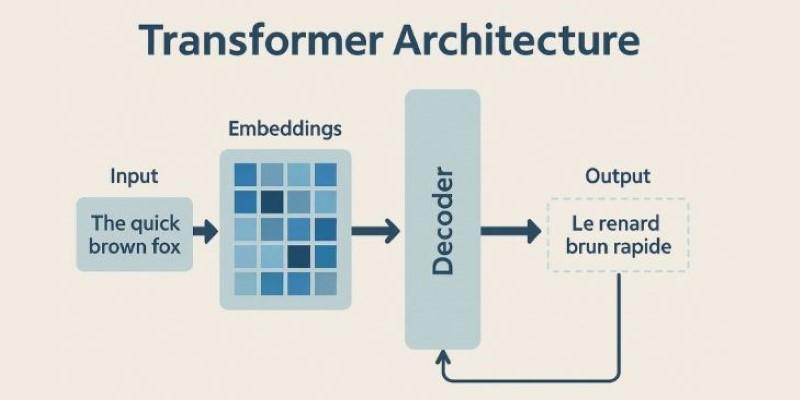

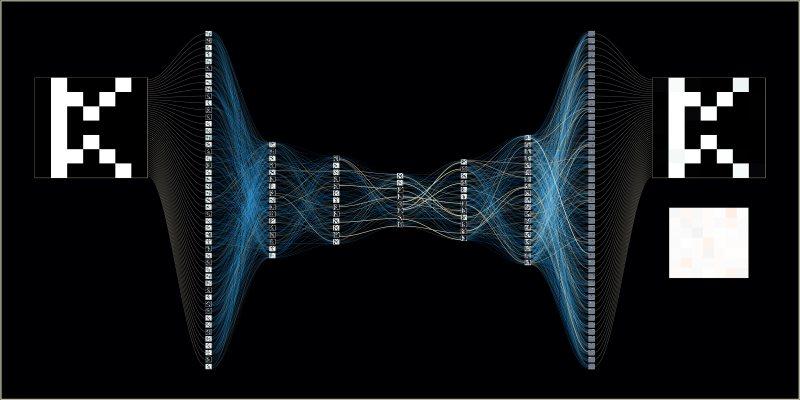

Choosing the Right Architecture—Don’t Sweat It

When people say “fancy neural network,” images of deep and complicated models probably come to mind. But here’s a little secret: most problems don’t need fifty layers and a wild architecture. Keep it simple unless you have a very specific reason to do otherwise.

Start with the Basics

The classic feedforward network—the bread and butter of neural nets—handles a surprising number of problems well. You might hear about convolutional or recurrent layers, but if you don’t need them, skip them. When in doubt, start small. A network with just one or two hidden layers is more than enough for most beginner projects.

Pick a Reasonable Size

It’s easy to fall for the “bigger is better” myth. Sure, those big networks in research papers have millions or even billions of parameters, but they also need massive computers and weeks of training time. For most practical cases, a compact network with a modest number of neurons in each layer will do. Think of it like picking shoes: you don’t grab the biggest pair in the store, you choose what fits.
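To get a feel for how quickly size adds up, you can count the weights and biases in a fully connected network. This is a small sketch; the layer sizes below are made-up examples, and `count_parameters` is a hypothetical helper.

```python
def count_parameters(layer_sizes):
    """Count weights and biases in a fully connected feedforward network.

    layer_sizes lists the number of units per layer, input first, output last.
    """
    total = 0
    for n_in, n_out in zip(layer_sizes, layer_sizes[1:]):
        total += n_in * n_out + n_out  # one weight per connection, one bias per unit
    return total

small = count_parameters([4, 8, 3])          # one hidden layer of 8 units
large = count_parameters([4, 512, 512, 3])   # two hidden layers of 512 units
print(small, large)  # 67 266755
```

Same inputs and outputs, but the second network has roughly 4,000 times as many numbers to learn, and it needs enough data to justify them.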

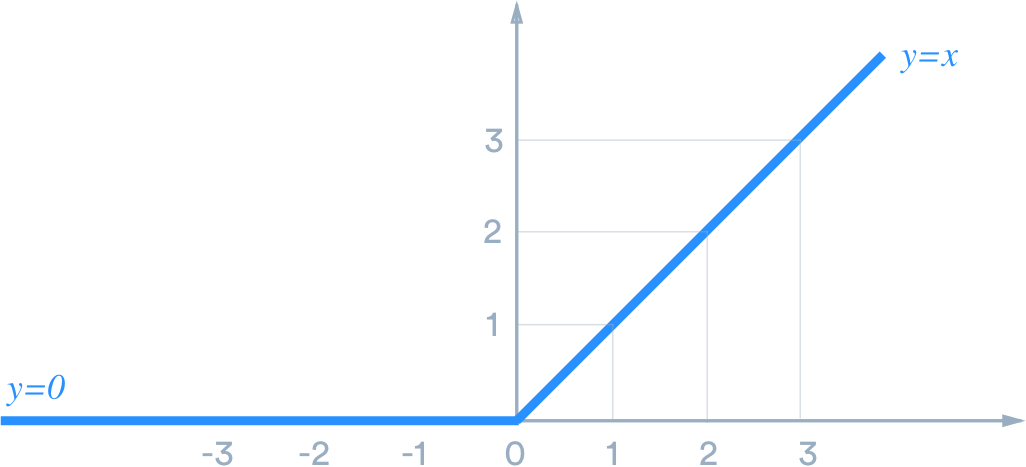

Don’t Ignore Activation Functions

This might sound technical, but it’s just about how your network decides what “signal” to pass on. For the hidden layers in almost every case, a function called “ReLU” (rectified linear unit) gets the job done. Stick with it, unless you run into a specific situation where it doesn’t work. There’s no need to get creative here.
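ReLU itself is about as simple as a function gets: negative inputs become zero, and everything else passes through unchanged. A quick sketch in plain Python, with made-up input values:

```python
def relu(x):
    """Rectified linear unit: pass positive values through, clamp the rest to zero."""
    return max(0.0, x)

outputs = [relu(v) for v in [-2.0, -0.5, 0.0, 1.5, 3.0]]
print(outputs)  # [0.0, 0.0, 0.0, 1.5, 3.0]
```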

The Nuts and Bolts of Training: Keep It Clean and Simple

Training your network is where the magic happens, but it can also be where the headaches start. If you keep things tidy and logical, you’ll avoid most problems.

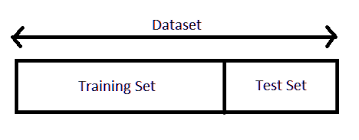

Split Your Data Early

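Before you even start training, set aside some data for checking your network’s work. This held-out portion is called your “test set.” Use one portion to train and the other to check your progress. Never let your network see the answers from the test set during training. If you expect to tweak the model many times, carve out a third portion (a “validation set”) for those repeated checks and save the test set for the final verdict. This single habit will stop you from fooling yourself about your results.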
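Here is a minimal sketch of a shuffled split using only the standard library. The 20% test fraction and the fixed seed are assumptions (common defaults, not rules), and `train_test_split` here is a hand-written helper, not a library function.

```python
import random

def train_test_split(examples, test_fraction=0.2, seed=0):
    """Shuffle a copy of the examples and split it into (train, test) lists."""
    shuffled = list(examples)              # copy so the original order is untouched
    random.Random(seed).shuffle(shuffled)  # fixed seed makes the split repeatable
    n_test = max(1, int(len(shuffled) * test_fraction))
    return shuffled[n_test:], shuffled[:n_test]

examples = list(range(10))  # stand-in for ten real data rows
train_set, test_set = train_test_split(examples)
print(len(train_set), "training examples,", len(test_set), "test examples")
```

Shuffling before splitting matters when the data is sorted in some way, for example by date or by label.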

Watch Out for Overfitting

Ever studied so much for a test that you memorized every question but couldn’t answer anything new? That’s what happens to neural networks if you aren’t careful. If your network starts getting perfect scores on your training data but stumbles on new data, it has learned the training set too well and can’t generalize. To avoid this, don’t use more layers or neurons than you need, and always test on data your network hasn’t seen before.
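A simple way to spot this is to compare accuracy on the training data with accuracy on held-out data. The sketch below uses made-up predictions, and the 10-point gap used as a warning threshold is an arbitrary assumption; pick whatever makes sense for your problem.

```python
def accuracy(predictions, labels):
    """Fraction of predictions that match the true labels."""
    correct = sum(1 for p, y in zip(predictions, labels) if p == y)
    return correct / len(labels)

def looks_overfit(train_acc, test_acc, max_gap=0.10):
    """Flag a model whose training accuracy beats its test accuracy by more than max_gap."""
    return (train_acc - test_acc) > max_gap

# Hypothetical results: perfect on training data, shaky on unseen data.
train_acc = accuracy([1, 0, 1, 1], [1, 0, 1, 1])
test_acc = accuracy([1, 0, 0, 1], [1, 1, 1, 1])
print(train_acc, test_acc, looks_overfit(train_acc, test_acc))  # 1.0 0.5 True
```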

Don’t Be Afraid to Adjust as You Go

Neural networks are not set-it-and-forget-it machines. If something isn’t working, try changing the number of neurons or maybe the way your data is arranged. Most successful builders try a few tweaks, check the results, and adjust again. There’s no magic formula, just clear thinking and patience.

Step-by-Step: Building Your First Fancy Neural Network

Step 1: Define Your Problem Clearly

Ask yourself: What do I want my network to achieve? Write it down in one sentence.

Step 2: Collect and Clean Your Data

Gather the data you need. Remove anything that looks off or doesn’t belong. Handle any missing values, and make sure you know what each column represents.

Step 3: Choose Your Input and Output

Decide what information goes into your network and what kind of answer you expect. Double-check that these line up with your goal.

Step 4: Split Your Data

Take your dataset and set aside a portion (often 20%) to check your network’s answers later. Never use this for training.

Step 5: Pick a Simple Model

Start with a basic feedforward network. Limit the number of hidden layers to one or two, and don’t overload each layer with neurons.

Step 6: Select the Activation Function

Stick with the ReLU function for hidden layers and a sensible choice for your output: softmax for categories, sigmoid for a yes-or-no answer, or no activation at all when you are predicting a plain number.
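For the category case, softmax turns the network’s raw output scores into probabilities that add up to 1. A minimal sketch in plain Python, with made-up scores; subtracting the largest score first is a standard trick to avoid overflow on big numbers.

```python
import math

def softmax(scores):
    """Convert raw scores into probabilities that sum to 1."""
    largest = max(scores)
    exps = [math.exp(s - largest) for s in scores]  # shift by the max for numerical stability
    total = sum(exps)
    return [e / total for e in exps]

probs = softmax([2.0, 1.0, 0.1])  # hypothetical scores for three categories
print([round(p, 3) for p in probs])
```

The category with the highest score always ends up with the highest probability, so the ranking never changes; only the scale does.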

Step 7: Train and Check

Let your network learn from your training data, but always keep an eye on your test data. If you see a big gap between your training and test results, try reducing the network’s complexity.
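To show what “train and check” looks like end to end, here is a small sketch of a one-hidden-layer network written in plain Python and trained with basic gradient descent. Everything in it is an assumption chosen for illustration: the toy task (learning to add two numbers), the four hidden neurons, the learning rate, and the number of passes over the data. Real projects usually lean on a library for this part.

```python
import random

def relu(x):
    return x if x > 0 else 0.0

def forward(x, W1, b1, W2, b2):
    """One hidden ReLU layer followed by a single linear output."""
    hidden = [relu(sum(w * xi for w, xi in zip(row, x)) + b) for row, b in zip(W1, b1)]
    output = sum(w * h for w, h in zip(W2, hidden)) + b2
    return hidden, output

def mse(data, W1, b1, W2, b2):
    """Mean squared error of the network over a list of (inputs, target) pairs."""
    return sum((forward(x, W1, b1, W2, b2)[1] - y) ** 2 for x, y in data) / len(data)

def train(data, hidden_size=4, epochs=200, learning_rate=0.05, seed=0):
    rng = random.Random(seed)
    n_inputs = len(data[0][0])
    W1 = [[rng.uniform(-0.5, 0.5) for _ in range(n_inputs)] for _ in range(hidden_size)]
    b1 = [0.0] * hidden_size
    W2 = [rng.uniform(-0.5, 0.5) for _ in range(hidden_size)]
    b2 = 0.0
    history = [mse(data, W1, b1, W2, b2)]  # loss before any training
    for _ in range(epochs):
        for x, y in data:
            hidden, output = forward(x, W1, b1, W2, b2)
            error = output - y  # gradient of 0.5 * (output - y)**2 w.r.t. the output
            for j in range(hidden_size):
                # The gradient only flows back through hidden units that were active.
                grad_hidden = error * W2[j] if hidden[j] > 0 else 0.0
                W2[j] -= learning_rate * error * hidden[j]
                b1[j] -= learning_rate * grad_hidden
                for i in range(n_inputs):
                    W1[j][i] -= learning_rate * grad_hidden * x[i]
            b2 -= learning_rate * error
        history.append(mse(data, W1, b1, W2, b2))  # loss after each pass
    return (W1, b1, W2, b2), history

# Toy task: learn y = a + b. Training points sit on a grid; test points do not.
train_data = [([a, b], a + b) for a in (0.0, 0.5, 1.0) for b in (0.0, 0.5, 1.0)]
test_data = [([0.25, 0.75], 1.0), ([0.75, 0.25], 1.0)]

params, history = train(train_data)
test_loss = mse(test_data, *params)
print("training loss: %.4f -> %.4f" % (history[0], history[-1]))
print("held-out loss: %.4f" % test_loss)
```

The two printed numbers are the ones to compare: if the training loss keeps dropping while the held-out loss stalls or climbs, that is the gap this step warns about.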

Step 8: Adjust as Needed

If things don’t work out, tweak one thing at a time. Add or remove neurons, shuffle your data, or try a different training approach. See what changes.

Wrapping It All Up

Building a neural network doesn’t have to be complicated. Focus on the basics: know your goal, keep your data clean, start small, and only get fancy if the problem demands it. With a steady approach, you’ll find yourself making progress without feeling lost in the weeds. And who knows? That “fancy” neural network might turn out to be a lot simpler than you imagined.